Facial expression is a critical step in Roblox’s march towards making the metaverse a part of people’s daily lives through natural and believable avatar interactions. However, animating virtual 3D character faces in real time is an enormous technical challenge. Despite numerous research breakthroughs, there are limited commercial examples of real-time facial animation applications. This is particularly challenging at Roblox, where we support a dizzying array of user devices, real-world conditions, and wildly creative use cases from our developers.

In this post, we will describe a deep learning framework for regressing facial animation controls from video that both addresses these challenges and opens us up to a number of future opportunities. The framework described in this blog post was also presented as a talk at SIGGRAPH 2021.

Facial Animation

There are various options to control and animate a 3D face rig. The one we use is called the Facial Action Coding System, or FACS, which defines a set of controls (based on facial muscle placement) to deform the 3D face mesh. Despite being over 40 years old, FACS is still the de facto standard because its controls are intuitive and easily transferable between rigs. An example of a FACS rig being exercised can be seen below.

Method

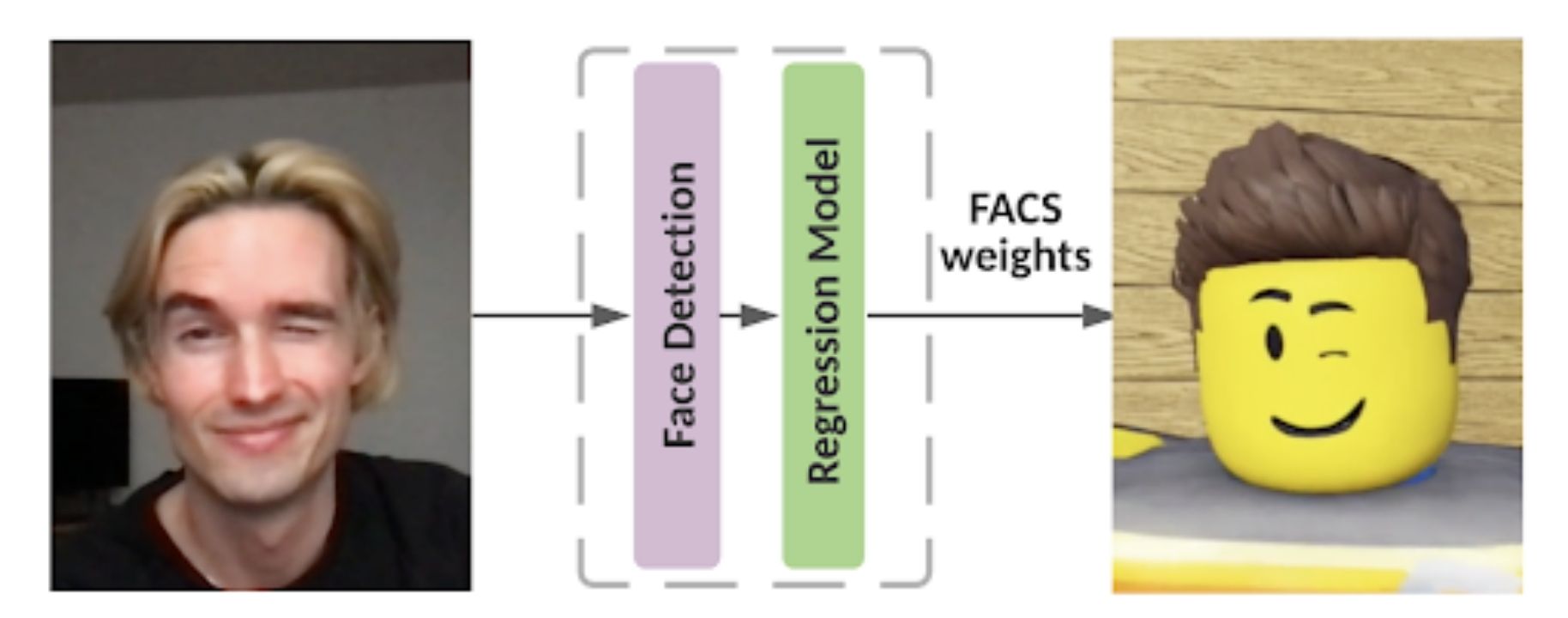

The idea is for our deep learning-based method to take a video as input and output a set of FACS weights for each frame. To achieve this, we use a two-stage architecture: face detection and FACS regression.

Face Detection

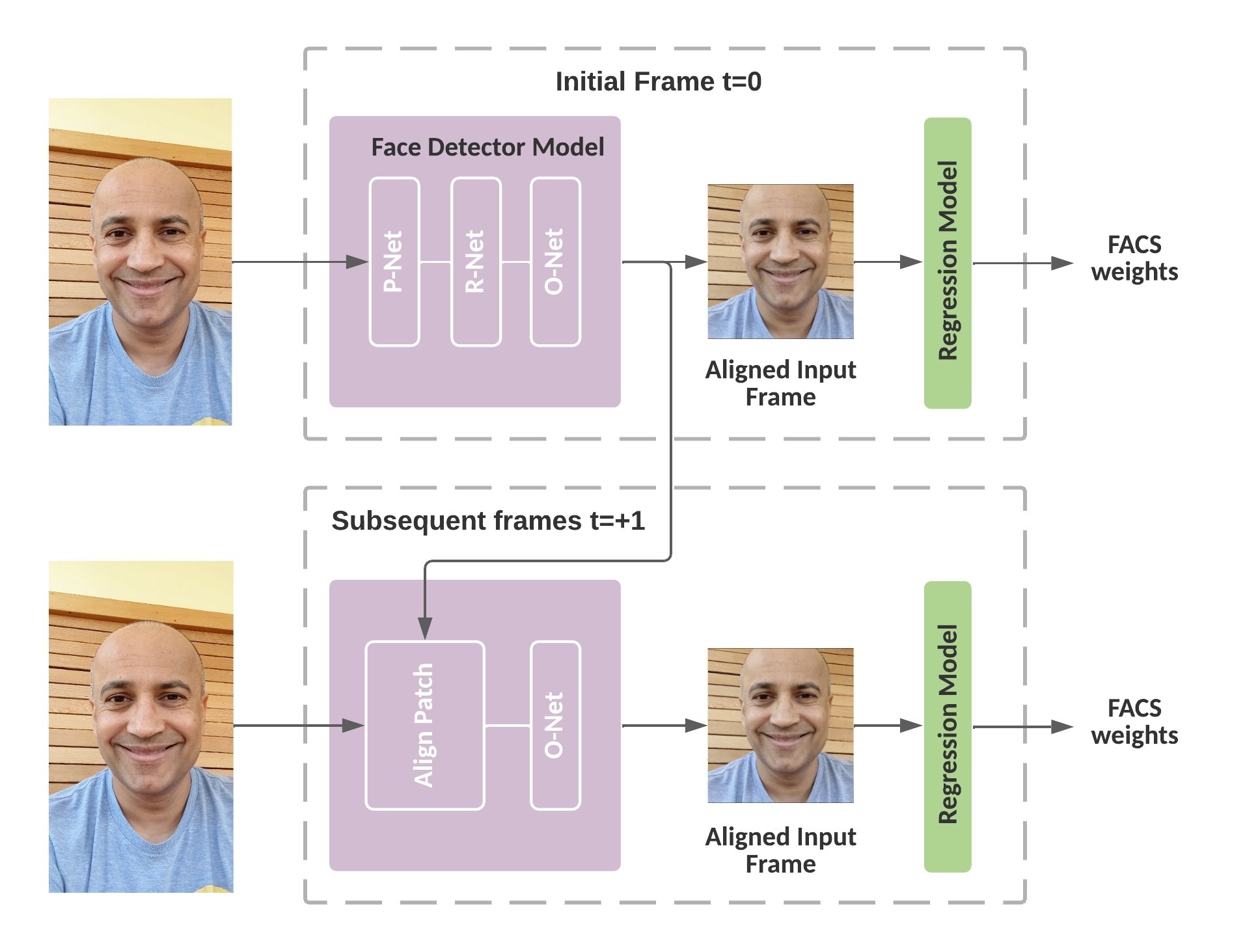

To achieve the best performance, we implement a fast variant of the relatively well-known MTCNN face detection algorithm. The original MTCNN algorithm is quite accurate and fast, but not fast enough to support real-time face detection on many of the devices used by our users. To solve this, we tweaked the algorithm for our specific use case: once a face is detected, our MTCNN implementation runs only the final O-Net stage on the successive frames, resulting in an average 10x speed-up. We also use the facial landmarks (location of eyes, nose, and mouth corners) predicted by MTCNN to align the face bounding box prior to the subsequent regression stage. This alignment allows for a tight crop of the input images, reducing the computation of the FACS regression network.
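The control flow described above can be illustrated with a minimal sketch. It is not the production implementation: the class name `TrackingFaceDetector` and the two callables `full_cascade` and `refine_only` are hypothetical stand-ins for the full MTCNN cascade and an O-Net-only pass, and falling back to the full cascade when the face is lost is an assumption.

```python
from typing import Any, Callable, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x, y, width, height), hypothetical layout


class TrackingFaceDetector:
    """Runs the full cascade until a face is found, then only the final stage."""

    def __init__(
        self,
        full_cascade: Callable[[Any], Optional[Box]],
        refine_only: Callable[[Any, Box], Optional[Box]],
    ) -> None:
        # Both callables are placeholders supplied by the caller.
        self.full_cascade = full_cascade
        self.refine_only = refine_only
        self.last_box: Optional[Box] = None

    def detect(self, frame: Any) -> Optional[Box]:
        if self.last_box is not None:
            # Cheap path: refine the previous box with the final stage only.
            box = self.refine_only(frame, self.last_box)
            if box is not None:
                self.last_box = box
                return box
        # Expensive path: no previous face, or the face was lost (assumed fallback).
        self.last_box = self.full_cascade(frame)
        return self.last_box
```

With this structure, the expensive cascade runs only on the first frame and on frames where tracking fails, which is where a speed-up of the kind reported above would come from.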

FACS Regression

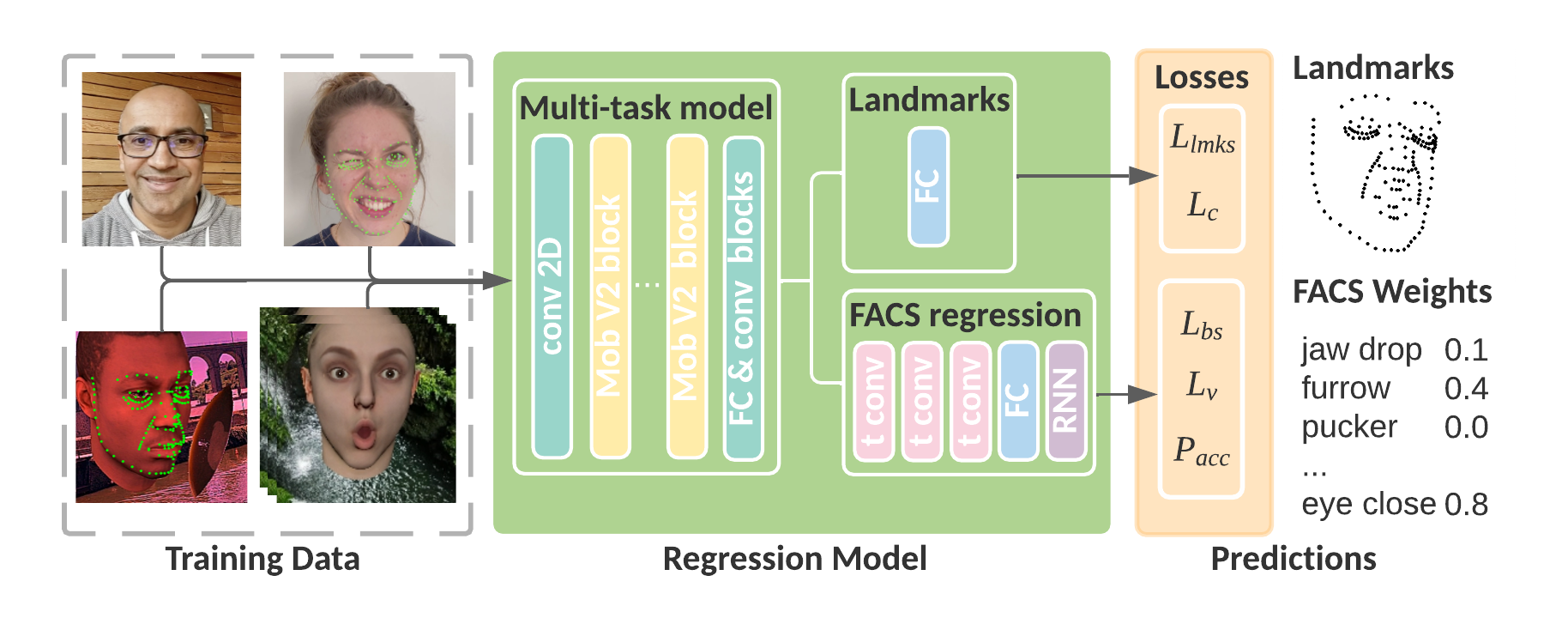

Our FACS regression architecture uses a multitask setup which co-trains landmarks and FACS weights using a shared backbone (known as the encoder) as a feature extractor.

This setup allows us to augment the FACS weights learned from synthetic animation sequences with real images that capture the subtleties of facial expression. The FACS regression sub-network, which is trained alongside the landmarks regressor, uses causal convolutions. These convolutions operate on features over time, unlike the convolutions in the encoder, which operate only on spatial features. This allows the model to learn temporal aspects of facial animations and makes it less sensitive to inconsistencies such as jitter.
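To make the idea of a causal convolution concrete, here is a minimal NumPy sketch. The function name, tensor shapes, and zero padding of the past are assumptions chosen for illustration, not details of the actual network. The key property is that the output at frame t depends only on frame t and earlier frames.

```python
import numpy as np


def causal_conv1d(features: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Causal 1D convolution over time.

    features: (T, C_in) per-frame feature vectors.
    kernel:   (K, C_in, C_out); kernel[K - 1] multiplies the current frame.
    Returns:  (T, C_out), where row t uses only frames t-K+1 .. t.
    """
    num_frames, channels_in = features.shape
    taps = kernel.shape[0]
    # Pad only the past with zeros, so no future frame leaks into the output.
    padded = np.concatenate([np.zeros((taps - 1, channels_in)), features], axis=0)
    out = np.zeros((num_frames, kernel.shape[2]))
    for t in range(num_frames):
        window = padded[t:t + taps]  # frames t-K+1 .. t
        out[t] = np.einsum("kc,kco->o", window, kernel)
    return out
```

Because no future frames are needed, a layer like this can run frame by frame on a live video stream without adding latency.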

Training

We initially train the model for only landmark regression using both real and synthetic images. After a certain number of steps, we start adding synthetic sequences to learn the weights for the temporal FACS regression subnetwork. The synthetic animation sequences were created by our interdisciplinary team of artists and engineers. Our artist set up a normalized rig, shared across all the different identities (face meshes), which was exercised and rendered automatically using animation files containing FACS weights. These animation files were generated using classic computer vision algorithms running on face-calisthenics video sequences, and were supplemented with hand-animated sequences for extreme facial expressions that were missing from the calisthenic videos.
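The two-phase schedule can be pictured as a simple switch on the training step. This is only a sketch: the function name, the data source labels, and the `landmark_only_steps` parameter are hypothetical, and the post does not state the actual step count.

```python
from typing import List


def active_data_sources(step: int, landmark_only_steps: int) -> List[str]:
    """Return which data sources feed training at a given step.

    landmark_only_steps is a hypothetical hyperparameter standing in for
    the unspecified "certain number of steps".
    """
    # Phase 1: landmark regression only, on real and synthetic images.
    sources = ["real_images", "synthetic_images"]
    if step >= landmark_only_steps:
        # Phase 2: also train the temporal FACS subnetwork on synthetic sequences.
        sources.append("synthetic_sequences")
    return sources
```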

Losses

To train our deep learning network, we linearly combine several different loss terms to regress landmarks and FACS weights:

- Positional Losses. For landmarks, the RMSE of the regressed positions ($L_{lmks}$), and for FACS weights, the MSE ($L_{facs}$).

- Temporal Losses. For FACS weights, we reduce jitter using temporal losses over synthetic animation sequences. A velocity loss ($L_{v}$) inspired by [Cudeiro et al. 2019] is the MSE between the target and predicted velocities. It encourages overall smoothness of dynamic expressions. In addition, a regularization term on the acceleration ($L_{acc}$) is added to reduce jitter in the FACS weights (its weight is kept low to preserve responsiveness).

- Consistency Loss. We utilize real images without annotations in an unsupervised consistency loss ($L_{c}$), similar to [Honari et al. 2018]. This encourages landmark predictions to be equivariant under different image transformations, improving landmark location consistency between frames without requiring landmark labels for a subset of the training images.
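The loss terms listed above can be sketched in NumPy as follows. This is an illustrative reading under stated assumptions, not the training code: velocity and acceleration are taken as first and second finite differences over time, the RMSE is computed over all landmark coordinates, the consistency term uses a squared error, and all function names and the loss-weight dictionary are hypothetical.

```python
from typing import Callable, Dict

import numpy as np


def landmark_rmse(pred: np.ndarray, target: np.ndarray) -> float:
    # RMSE over all landmark coordinates (assumed reduction).
    return float(np.sqrt(np.mean((pred - target) ** 2)))


def facs_mse(pred: np.ndarray, target: np.ndarray) -> float:
    return float(np.mean((pred - target) ** 2))


def velocity_loss(pred: np.ndarray, target: np.ndarray) -> float:
    # Sequences are (T, num_controls); velocity is the frame-to-frame difference.
    return float(np.mean((np.diff(pred, axis=0) - np.diff(target, axis=0)) ** 2))


def acceleration_reg(pred: np.ndarray) -> float:
    # Penalize the second difference of the predictions only (a regularizer).
    return float(np.mean(np.diff(pred, n=2, axis=0) ** 2))


def consistency_loss(
    lmks_original: np.ndarray,
    lmks_transformed: np.ndarray,
    transform: Callable[[np.ndarray], np.ndarray],
) -> float:
    # Equivariance: landmarks predicted on the transformed image should match
    # the transform applied to landmarks predicted on the original image.
    return float(np.mean((transform(lmks_original) - lmks_transformed) ** 2))


def total_loss(terms: Dict[str, float], weights: Dict[str, float]) -> float:
    # Linear combination of the individual terms; weights are hyperparameters.
    return sum(weights[name] * value for name, value in terms.items())
```

Note that a prediction offset from the target by a constant has zero velocity loss but nonzero positional loss, which is why the temporal terms complement the positional ones rather than replace them.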

Performance

To improve the performance of the encoder without reducing accuracy or increasing jitter, we selectively used unpadded convolutions to decrease the feature map size. This gave us more control over the feature map sizes than strided convolutions would. To maintain the residual, we slice the feature map before adding it to the output of an unpadded convolution. Additionally, we set the depth of the feature maps to a multiple of 8 for efficient memory use with vector instruction sets such as AVX and Neon FP16, resulting in a 1.5x performance boost.
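The slicing trick for residual connections can be shown with a small NumPy sketch. It assumes a 3x3 kernel, equal input and output depth, and a channels-last layout; the function names are hypothetical, and rounding a channel count up to the next multiple of 8 is one possible way to satisfy the depth constraint.

```python
import numpy as np


def conv3x3_valid(x: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Unpadded 3x3 convolution (cross-correlation, as in most DL frameworks).

    x: (H, W, C_in), kernel: (3, 3, C_in, C_out) -> (H - 2, W - 2, C_out).
    """
    height, width, _ = x.shape
    out = np.zeros((height - 2, width - 2, kernel.shape[3]))
    for dy in range(3):
        for dx in range(3):
            # Each kernel tap contributes a shifted view of the input.
            out += x[dy:dy + height - 2, dx:dx + width - 2] @ kernel[dy, dx]
    return out


def residual_block_unpadded(x: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    y = conv3x3_valid(x, kernel)
    # The output lost one pixel per side, so crop the skip path to match.
    skip = x[1:-1, 1:-1]
    return y + skip


def round_up_to_multiple(channels: int, multiple: int = 8) -> int:
    # Hypothetical helper: pick a feature depth that is a multiple of 8.
    return ((channels + multiple - 1) // multiple) * multiple
```

Each unpadded 3x3 layer shrinks the feature map by two pixels per dimension, which gives finer-grained size reduction than the halving produced by a stride of 2.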

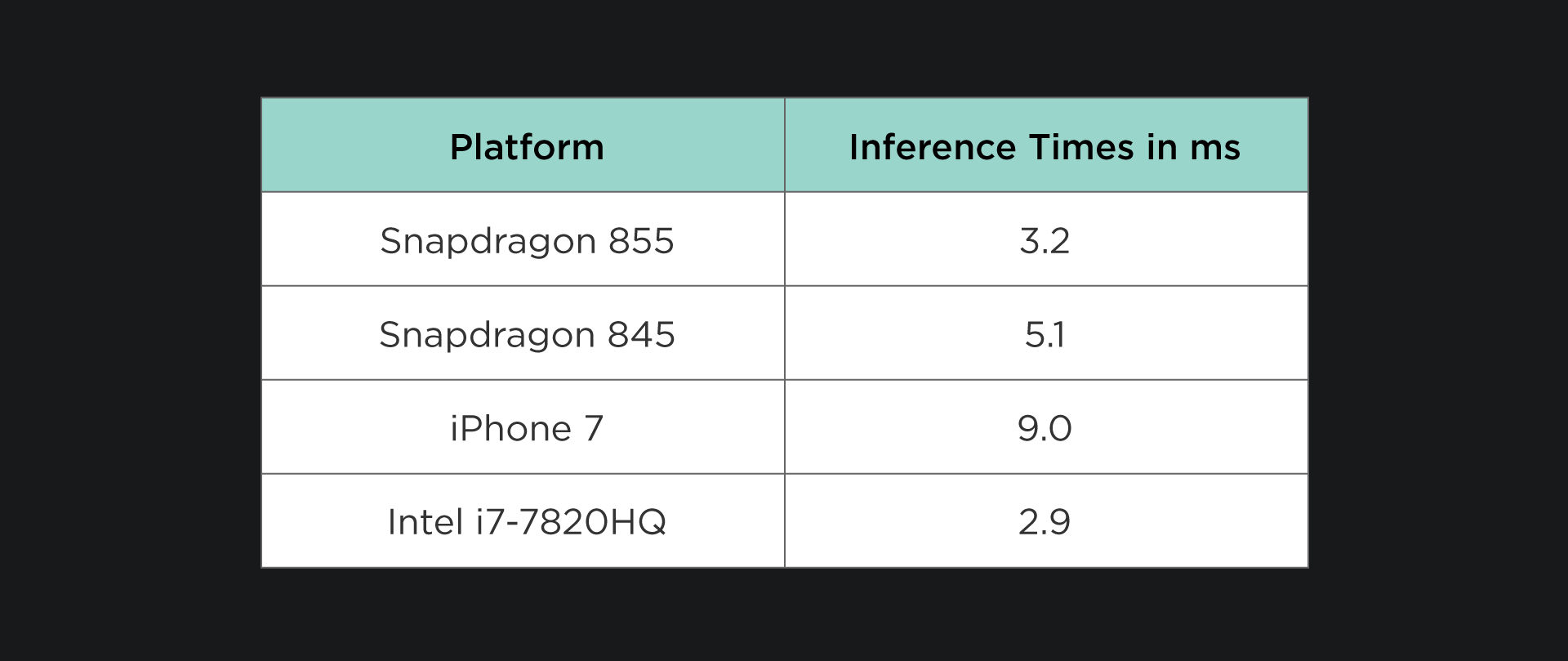

Our final model has 1.1 million parameters and requires 28.1 million multiply-accumulates to execute. For reference, vanilla MobileNet V2 (which our architecture is based on) requires 300 million multiply-accumulates to execute. We use the NCNN framework for on-device model inference, and the single-threaded execution time (including face detection) for a frame of video is listed in the table below. Please note that an execution time of 16 ms would support processing 60 frames per second (FPS).
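The figures above can be checked with a few lines of arithmetic. The helper names are hypothetical; the numbers are the ones quoted in the text. Keep in mind that a ratio of multiply-accumulates is only a rough proxy for a ratio of execution times.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


def max_fps(execution_ms: float) -> float:
    """Highest frame rate sustainable at a given per-frame execution time."""
    return 1000.0 / execution_ms


# Multiply-accumulate counts quoted in the text.
MOBILENET_V2_MACS = 300e6
MODEL_MACS = 28.1e6

# Roughly how many times fewer multiply-accumulates the model needs.
mac_reduction = MOBILENET_V2_MACS / MODEL_MACS
```

At 60 FPS the per-frame budget is about 16.7 ms, so a 16 ms execution time fits within it, and the model needs a little over ten times fewer multiply-accumulates than vanilla MobileNet V2.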

What’s Next

Our synthetic data pipeline allowed us to iteratively improve the expressivity and robustness of the trained model. We added synthetic sequences to improve responsiveness to missed expressions, and also balanced training across varied facial identities. We achieve high-quality animation with minimal computation because of the temporal formulation of our architecture and losses, a carefully optimized backbone, and error-free ground truth from the synthetic data. The temporal filtering carried out in the FACS weights subnetwork lets us reduce the number and size of layers in the backbone without increasing jitter. The unsupervised consistency loss lets us train with a large set of real data, improving the generalization and robustness of our model. We continue to refine and improve our models to get even more expressive, jitter-free, and robust results.

If you are interested in working on similar challenges at the forefront of real-time facial tracking and machine learning, please check out some of our open positions with our team.